Ethics

Lecture 23

Duke University

STA 101 - Fall 2023

Warm up

Announcements

- Course evaluations: on DukeHub

- TA evaluations: email from Dr. Joan Durso

Misrepresentation

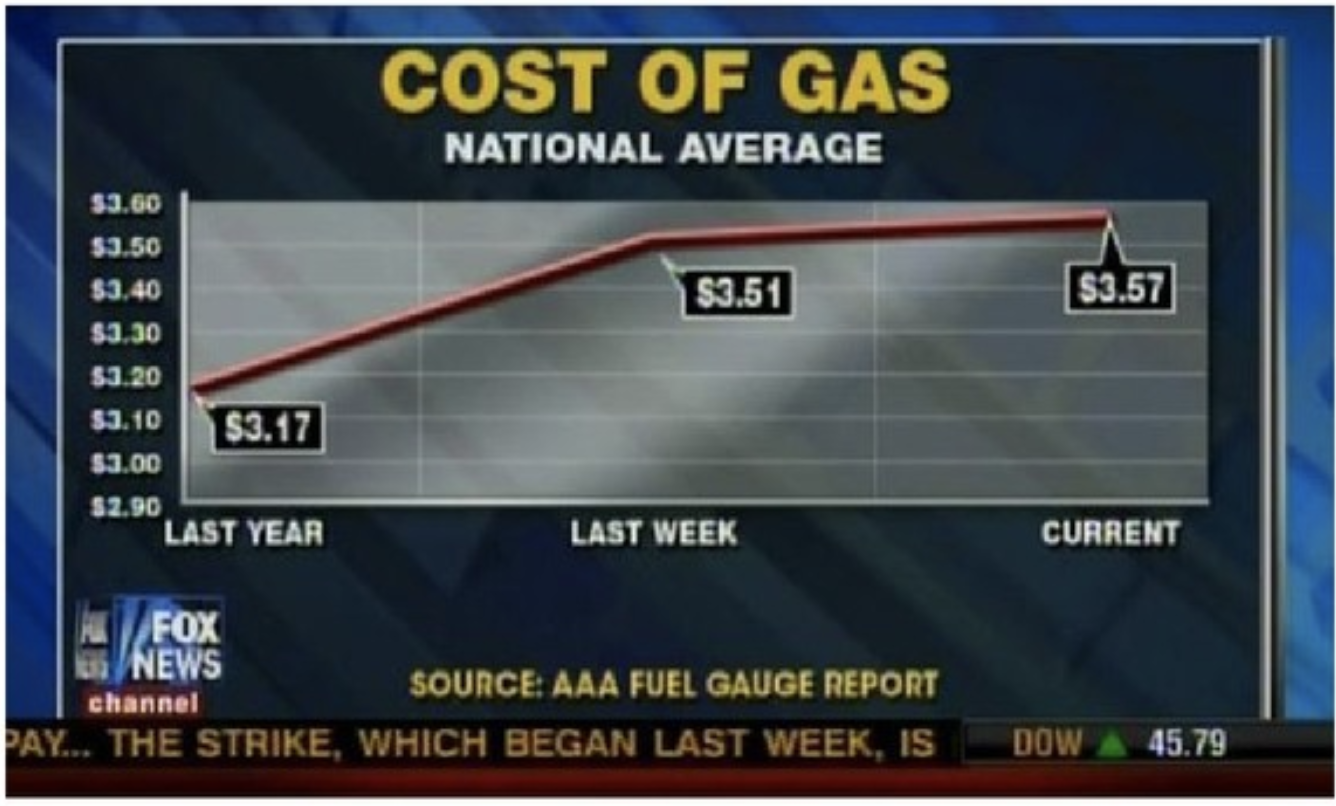

Cost of gas

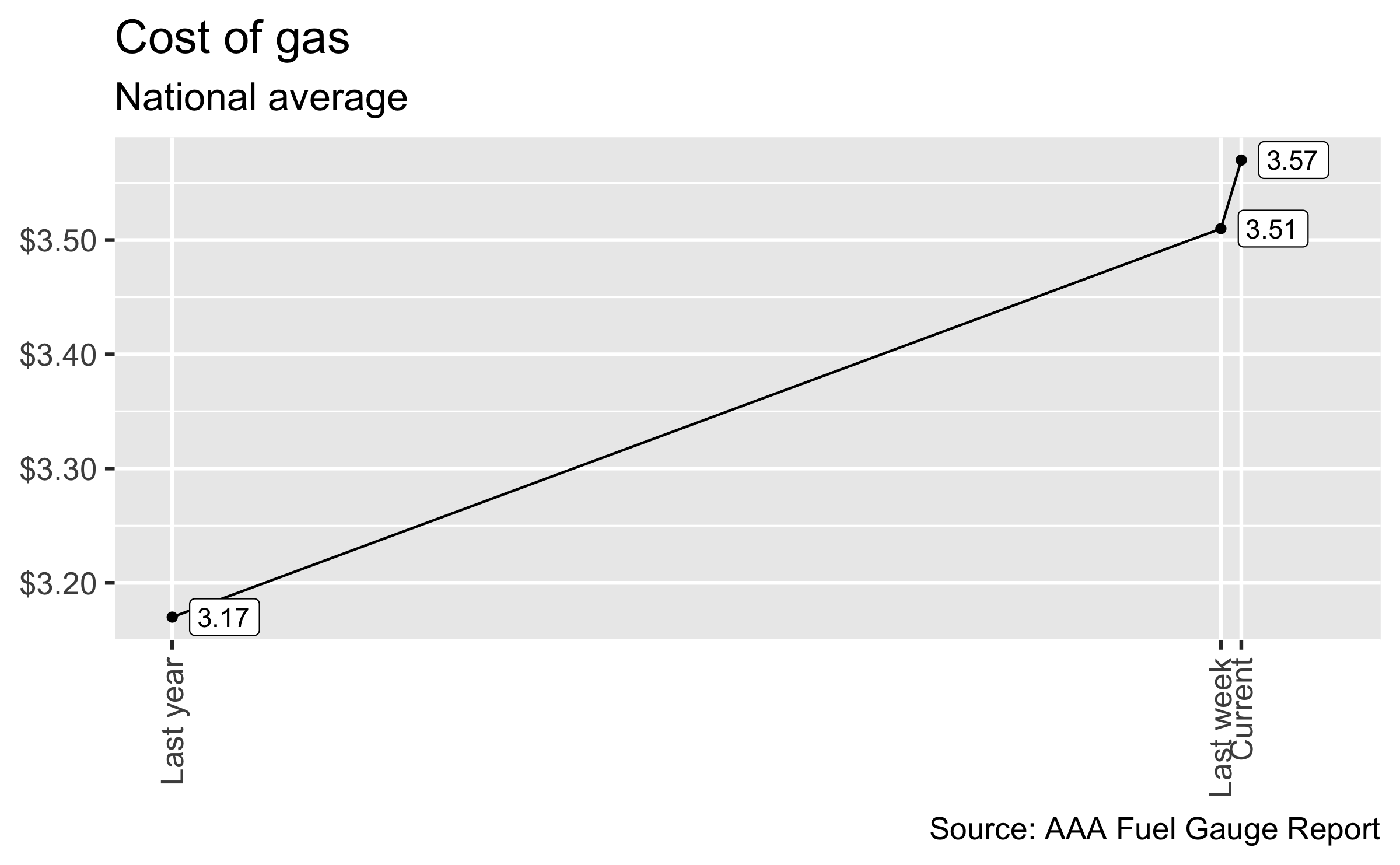

Cost of gas - revisit

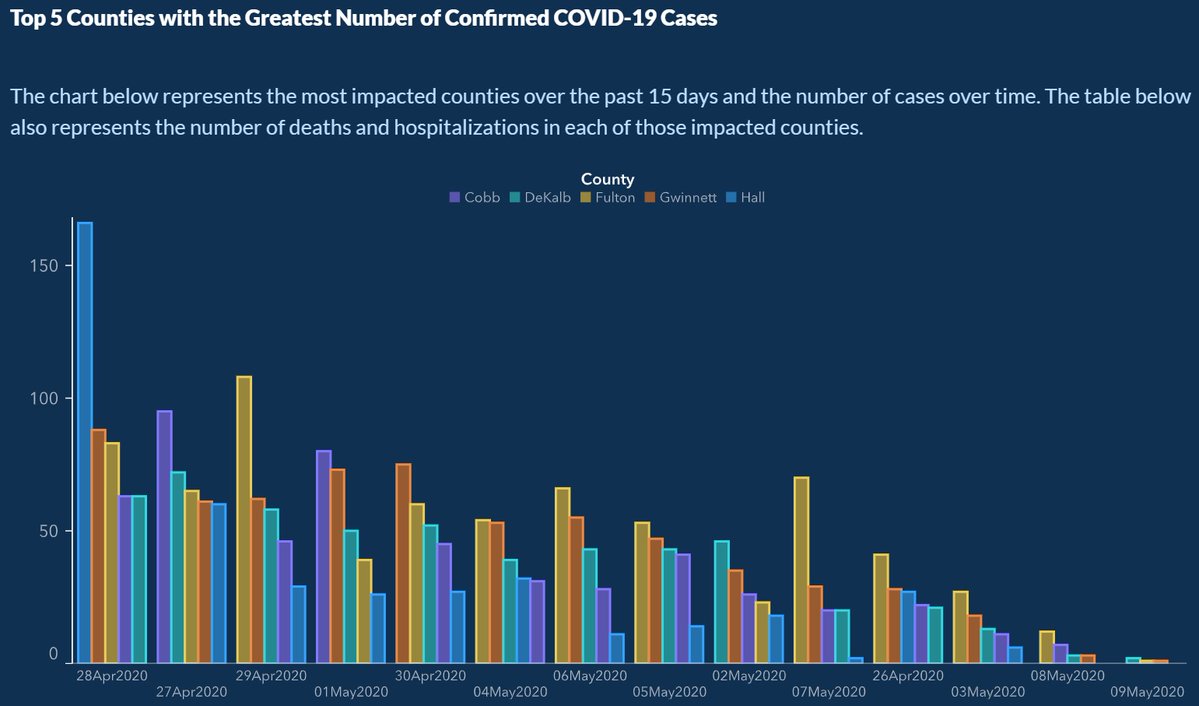

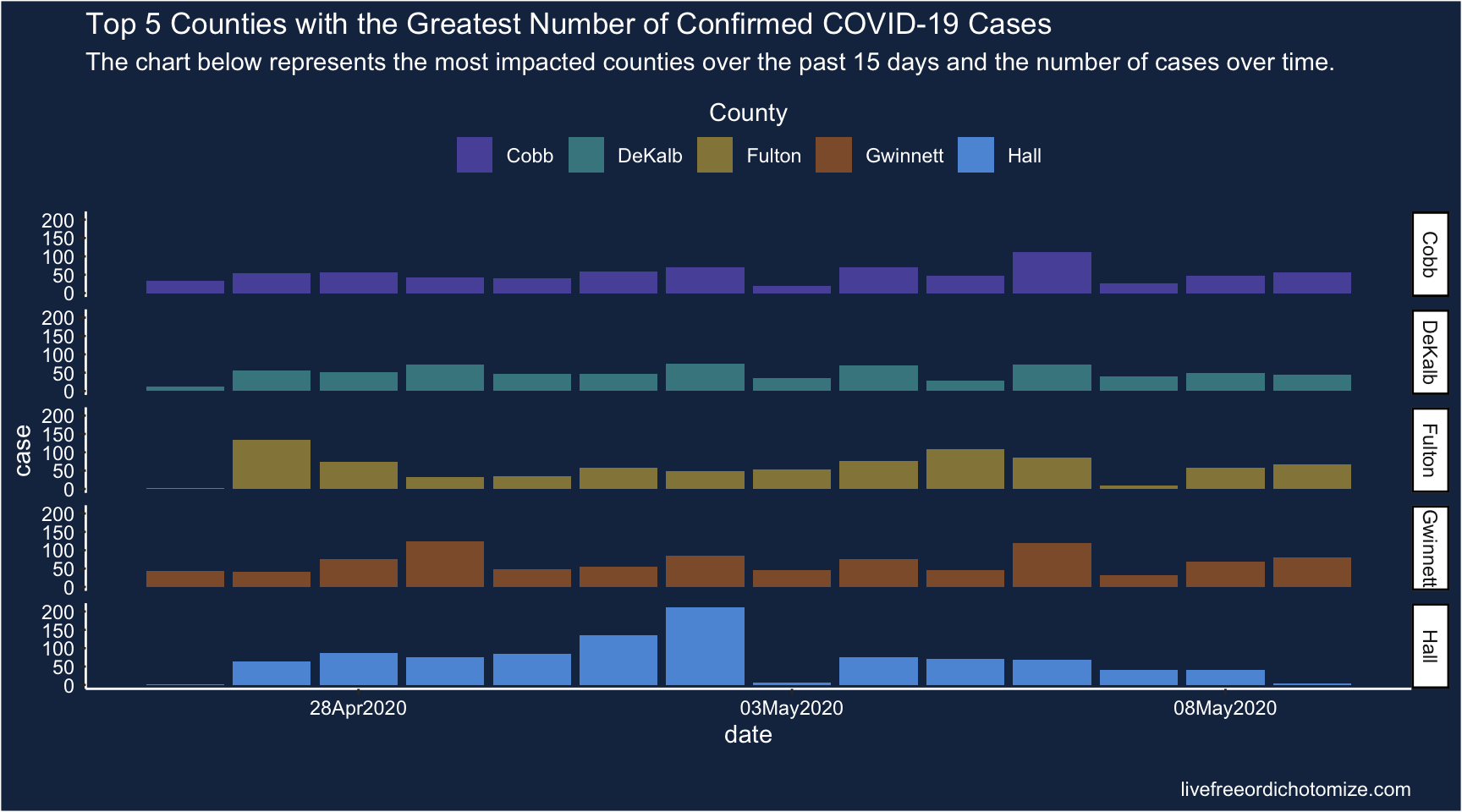

COVID-19 Cases

What is wrong with this graph? How would you correct it?

COVID-19 Cases - redo

Brexit

What is the graph trying to show?

Why is this graph misleading?

How can you improve this graph?

Exercise and cancer

Exercise Can Lower Risk of Some Cancers By 20%

People who were more active had on average a 20% lower risk of cancers of the esophagus, lung, kidney, stomach, endometrium and others compared with people who were less active.

Exercise and cancer - another look

Exercising drives down risk for 13 cancers, research shows

[…] those who got the most moderate to intense exercise reduced their risk of developing seven kinds of cancer by at least 20%.

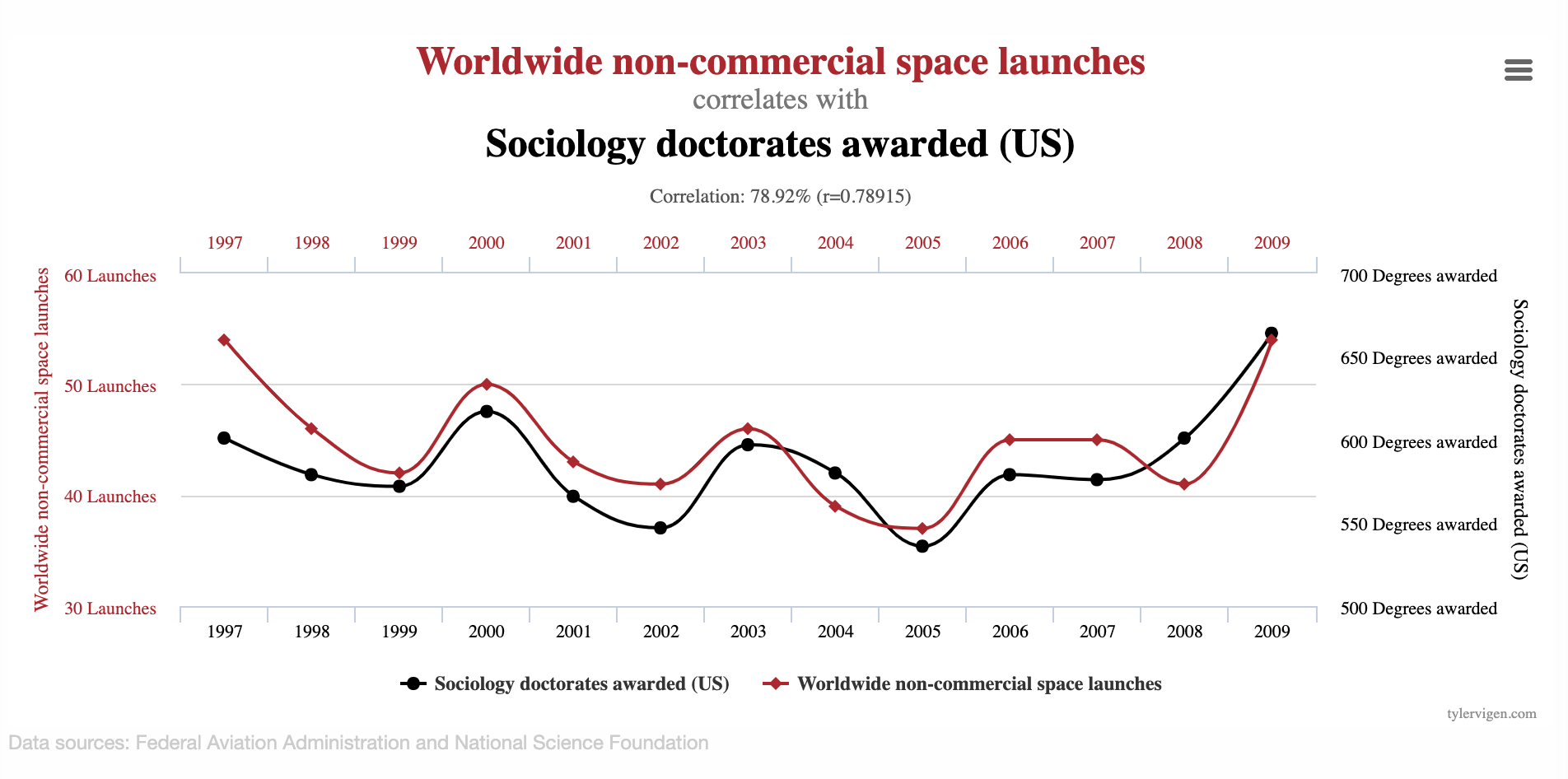

Spurious correlations

What does this graph show?

Work with your neighbor to write a misleading news article title based on this graph. (Submit on Canvas as today’s check-in. Access code: spurious)

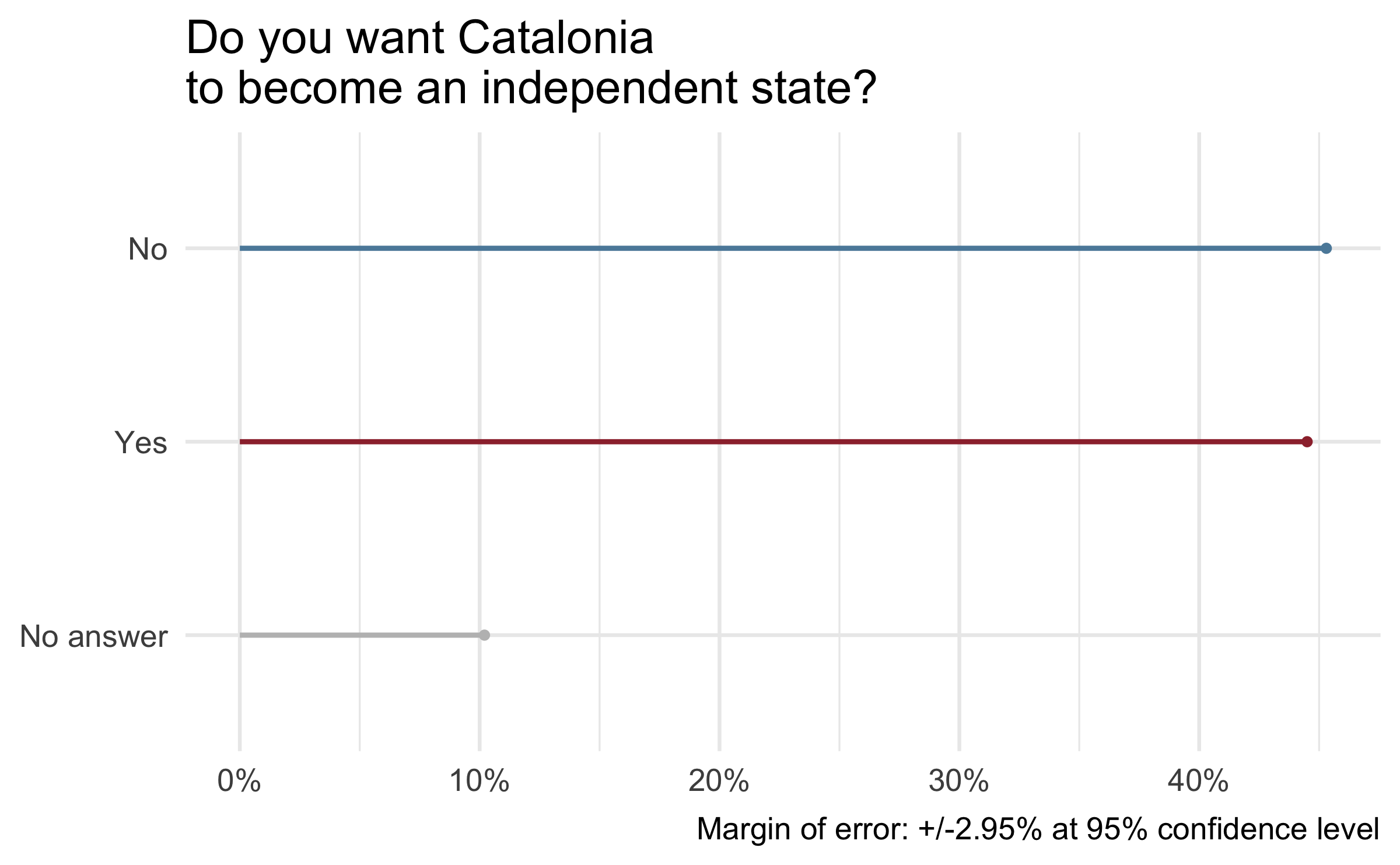

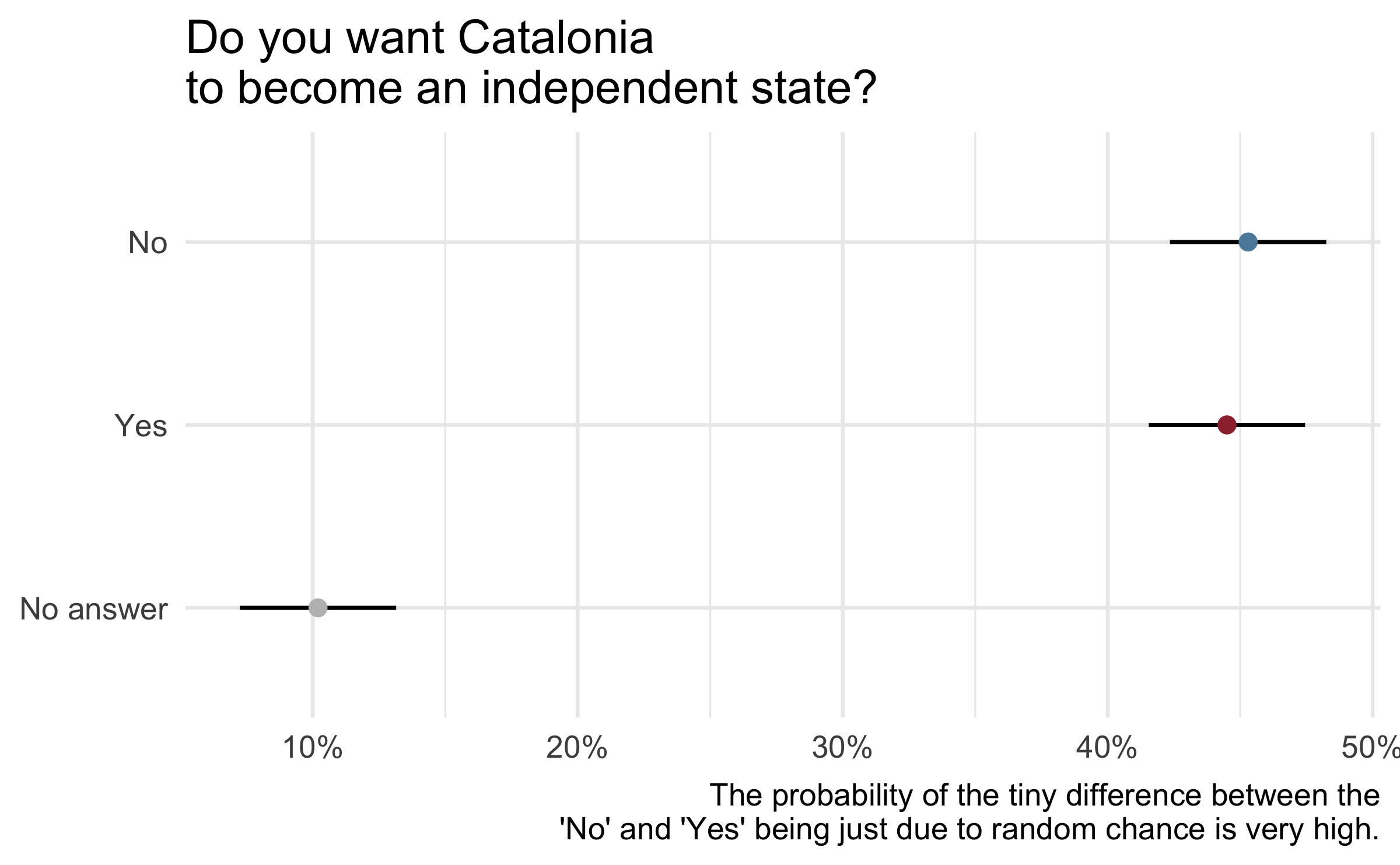
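A common source of spurious correlation is that two unrelated quantities both trend over time. The Python sketch below uses simulated data (hypothetical, not the series in the graph) to show that a shared upward trend alone can push Pearson's correlation close to 1. The correlation between year-over-year changes, which removes the trend, is typically far weaker. This is one illustration of how such graphs can arise, not a claim about how this particular graph was produced.

```python
import random

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

# Two simulated, causally unrelated series over 20 time steps (hypothetical data).
# Each is an upward linear trend plus its own independent random noise.
rng = random.Random(2023)  # fixed seed so the sketch is reproducible
steps = range(20)
series_a = [10 + 2.0 * t + rng.gauss(0, 1) for t in steps]
series_b = [500 + 35.0 * t + rng.gauss(0, 20) for t in steps]

# The shared time trend alone produces a correlation close to 1.
print(round(pearson_r(series_a, series_b), 3))

# Year-over-year changes strip out the common trend; what remains is
# independent noise, so this correlation is typically much weaker.
diff_a = [b - a for a, b in zip(series_a, series_a[1:])]
diff_b = [b - a for a, b in zip(series_b, series_b[1:])]
print(round(pearson_r(diff_a, diff_b), 3))
```

A misleading headline built on the first number would be implying a causal link that the data cannot support.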

Catalan independence

On December 19, 2014, the front page of Spanish national newspaper El País read “Catalan public opinion swings toward ‘no’ for independence, says survey”.

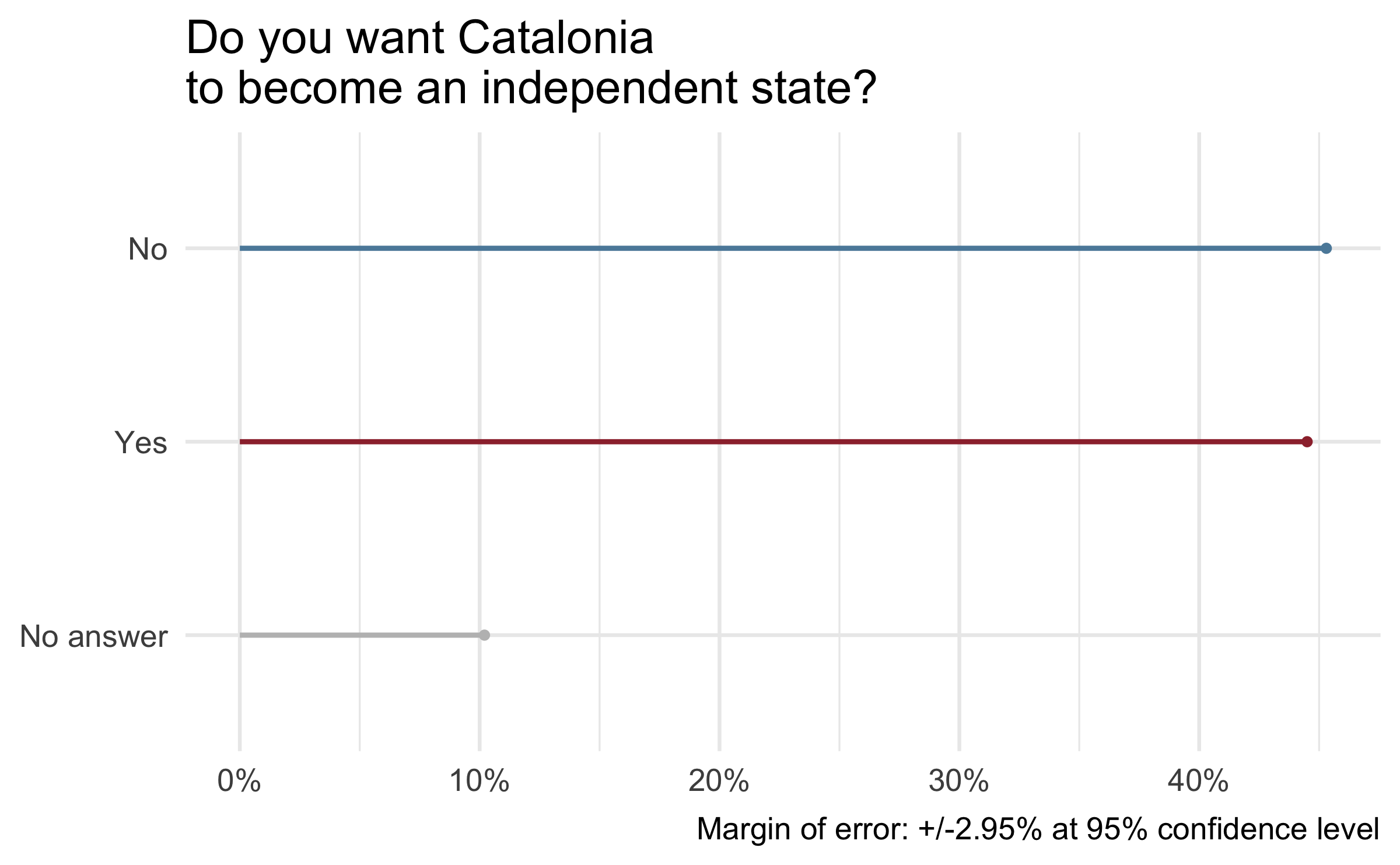

Catalan independence - with uncertainty
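Adding uncertainty means attaching an interval to each survey estimate. As a sketch of where such intervals come from, the Python below computes normal-approximation 95% confidence intervals for two proportions from the same survey, plus an interval for their difference. The sample size and percentages are hypothetical placeholders, not the figures behind the El País headline. Overlapping intervals are only a rough visual check; the interval for the difference is the more direct one, and when it contains 0 the survey alone cannot say which option leads.

```python
import math

Z_95 = 1.96  # normal critical value for a 95% interval

def prop_ci(p_hat, n, z=Z_95):
    """Normal-approximation CI for one proportion: p_hat +/- z * sqrt(p_hat(1 - p_hat)/n)."""
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return (p_hat - z * se, p_hat + z * se)

def diff_ci(p1, p2, n, z=Z_95):
    """CI for p1 - p2 when both shares come from the SAME sample of n respondents
    (multinomial): Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2)**2) / n."""
    d = p1 - p2
    se = math.sqrt((p1 + p2 - d ** 2) / n)
    return (d - z * se, d + z * se)

def intervals_overlap(a, b):
    """True if two (low, high) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]

# Hypothetical survey numbers, for illustration only.
n = 1000
p_no, p_yes = 0.45, 0.44

no_ci = prop_ci(p_no, n)
yes_ci = prop_ci(p_yes, n)
gap_ci = diff_ci(p_no, p_yes, n)

print("no: ", [round(x, 3) for x in no_ci])
print("yes:", [round(x, 3) for x in yes_ci])
print("no - yes:", [round(x, 3) for x in gap_ci])  # this interval contains 0
```

With these placeholder numbers, a one-point lead is well inside the margin of error, which is the kind of situation in which a headline announcing a "swing" overstates what the survey shows.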

Algorithmic bias

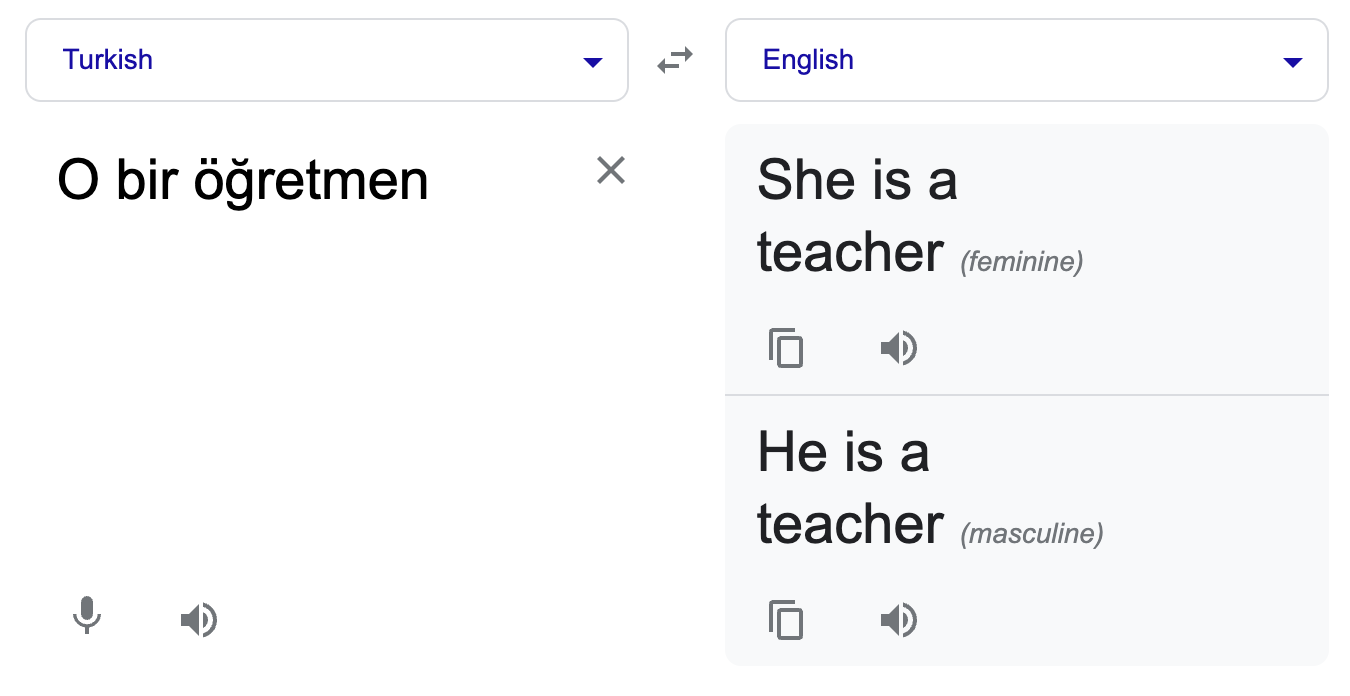

Google Translate - a few years ago

Google Translate - today

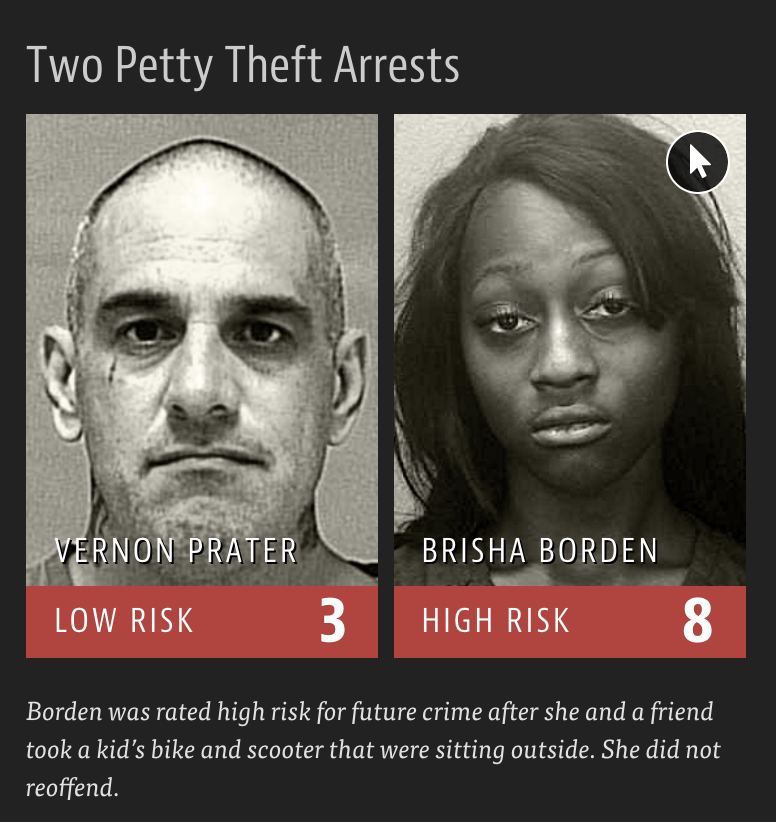

Criminal sentencing

There’s software used across the country to predict future criminals.

And it’s biased against blacks.

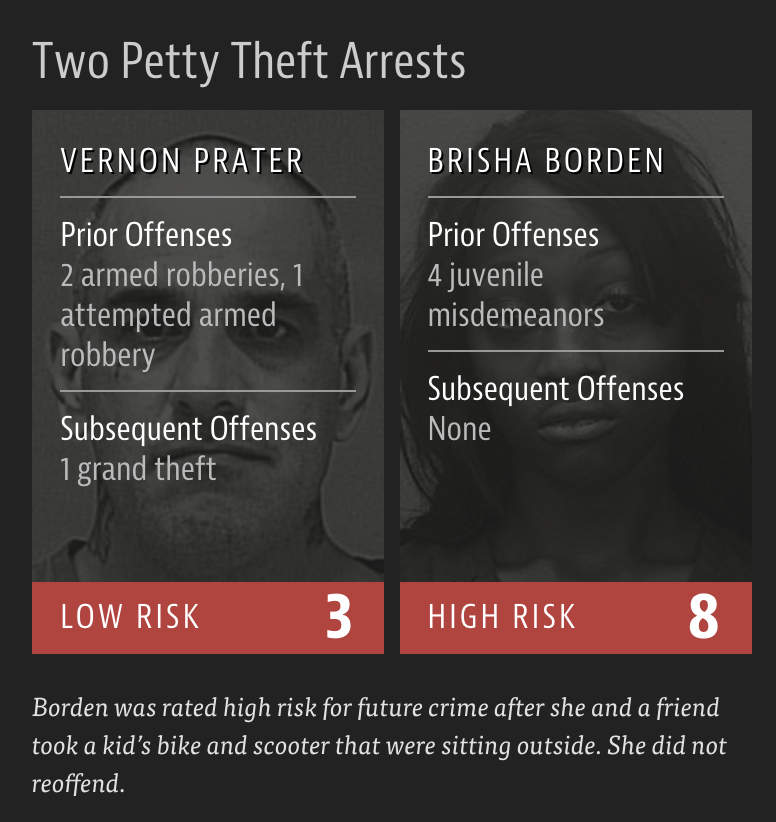

A tale of two convicts

ProPublica analysis

- Data: risk scores assigned to more than 7,000 people arrested in Broward County, Florida, in 2013 and 2014, plus whether they were charged with new crimes over the next two years.

Results:

- 20% of those predicted to commit violent crimes actually did.

- The algorithm had higher accuracy (61%) when the full range of crimes (e.g., misdemeanors) was taken into account.

- The algorithm was more likely to falsely flag black defendants as future criminals, at almost twice the rate of white defendants.

- White defendants were mislabeled as low risk more often than black defendants.
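These results compare different error rates, which are easy to conflate. The Python sketch below computes them from a confusion matrix for each group. The counts are hypothetical, chosen so that both groups have the same overall accuracy while one group has twice the false positive rate and the other a higher false negative rate, the kind of disparity described above; they are not ProPublica's data. The `Counts` class and the function names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class Counts:
    """Confusion-matrix counts for one group of defendants.
    'High risk' is the tool's label; 'reoffended' means charged with a new crime."""
    tp: int  # labeled high risk, did reoffend
    fp: int  # labeled high risk, did not reoffend
    tn: int  # labeled low risk, did not reoffend
    fn: int  # labeled low risk, did reoffend

def accuracy(c):
    # Share of all people whose label matched the outcome.
    return (c.tp + c.tn) / (c.tp + c.fp + c.tn + c.fn)

def precision(c):
    # Of those labeled high risk, the share who actually reoffended.
    return c.tp / (c.tp + c.fp)

def false_positive_rate(c):
    # Of those who did NOT reoffend, the share wrongly labeled high risk.
    return c.fp / (c.fp + c.tn)

def false_negative_rate(c):
    # Of those who DID reoffend, the share wrongly labeled low risk.
    return c.fn / (c.fn + c.tp)

# Hypothetical counts, NOT ProPublica's numbers.
group_a = Counts(tp=300, fp=200, tn=300, fn=200)
group_b = Counts(tp=200, fp=100, tn=400, fn=300)

for name, c in [("group A", group_a), ("group B", group_b)]:
    print(name,
          "accuracy", round(accuracy(c), 2),
          "precision", round(precision(c), 2),
          "FPR", round(false_positive_rate(c), 2),
          "FNR", round(false_negative_rate(c), 2))
```

Both hypothetical groups have 60% accuracy, yet group A's false positive rate is twice group B's while group B's false negative rate is higher. A single accuracy figure hides exactly the disparity that matters for fairness.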

How to write a racist AI without trying

ChatGPT

Do you use ChatGPT? How?

How, if at all, does ChatGPT (or other similar large language models) reflect human bias?

How would you feel if a company used AI-assisted chatbots to respond to your product return requests? How about if your professors responded to your emails with a similar chatbot? How about if your therapist responded to your inquiries with a similar chatbot?

Data privacy

Web scraping

A data analyst received permission to post a data set that was scraped from a social media site. The full data set included name, screen name, email address, geographic location, IP (Internet Protocol) address, demographic profiles, and preferences for relationships. The analyst removes name and email address from the data set in an effort to de-identify it.

Why might it be problematic to post this data set publicly?

How can you store the full data set in a safe and ethical way?

You want to make the data available so your analysis is transparent and reproducible. How can you modify the full data set to make the data available in an ethical way?
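These questions hinge on the fact that removing name and email does not de-identify a data set: screen names and IP addresses are themselves identifiers, and combinations of fields such as location and demographics (quasi-identifiers) can single a person out. One common way to quantify this risk is k-anonymity. The Python sketch below is a simplified illustration, not a complete anonymization procedure: it drops all direct identifiers, measures k on two assumed quasi-identifier columns, and coarsens location to raise k. The column names, helper functions, and records are all hypothetical.

```python
from collections import Counter

# Hypothetical column names; the real scraped data set may differ.
DIRECT_IDENTIFIERS = {"name", "email", "screen_name", "ip_address"}
QUASI_IDENTIFIERS = ("location", "age_group")

def drop_direct_identifiers(records):
    """Remove every direct identifier, not just name and email."""
    return [{k: v for k, v in r.items() if k not in DIRECT_IDENTIFIERS} for r in records]

def generalize_location(records):
    """Coarsen 'City, ST' to just the state abbreviation 'ST'."""
    return [{**r, "location": r["location"].split(", ")[-1]} for r in records]

def k_anonymity(records, quasi=QUASI_IDENTIFIERS):
    """Size of the smallest group of records sharing the same quasi-identifier values.
    k = 1 means someone is unique on those columns and easier to re-identify."""
    groups = Counter(tuple(r[q] for q in quasi) for r in records)
    return min(groups.values())

# Toy records, invented for illustration (IPs from a documentation range).
raw = [
    {"name": "Ana", "email": "ana@example.com", "screen_name": "ana_b",
     "ip_address": "192.0.2.10", "location": "Durham, NC", "age_group": "18-24", "preference": "x"},
    {"name": "Ben", "email": "ben@example.com", "screen_name": "benny",
     "ip_address": "192.0.2.11", "location": "Durham, NC", "age_group": "18-24", "preference": "y"},
    {"name": "Cy", "email": "cy@example.com", "screen_name": "cyc",
     "ip_address": "192.0.2.12", "location": "Raleigh, NC", "age_group": "18-24", "preference": "x"},
]

cleaned = drop_direct_identifiers(raw)
print(k_anonymity(cleaned))                       # 1: the Raleigh record is unique
print(k_anonymity(generalize_location(cleaned)))  # 3: all share ("NC", "18-24")
```

Real releases usually need more than this (for example, coarser categories, suppressing rare combinations, or sharing only aggregated data), but the sketch shows why dropping two columns is not enough.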

Further reading

Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence by The White House

Machine Bias by Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner

Ethics and Data Science by Mike Loukides, Hilary Mason, and DJ Patil (free Kindle download)

Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil

Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble

Why Pokémon Go’s plan to 3D-scan the world is dangerous by Loren Smith

How Charts Lie: Getting Smarter about Visual Information by Alberto Cairo

Calling Bullshit: The Art of Skepticism in a Data-Driven World by Carl Bergstrom and Jevin West

Time permitting

Project questions?

Any content questions?

Any formatting questions?

Any requests for live review?